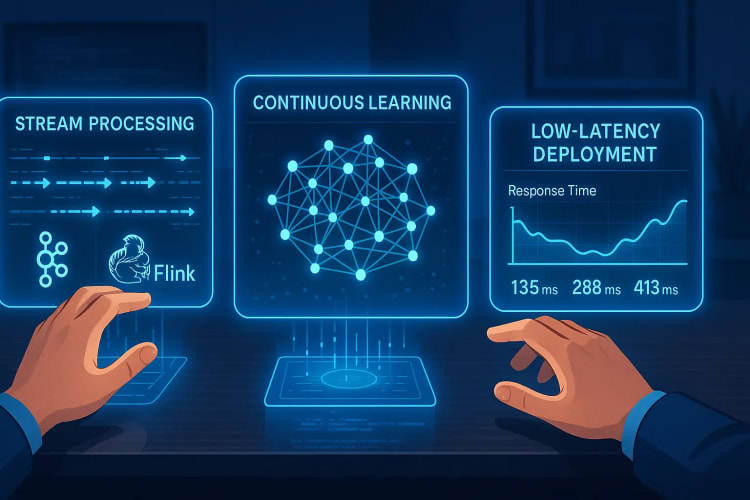

Building Real-Time AI Applications: A Developer's Guide to Stream Processing, Continuous Learning, and Low-Latency Deployments

In today's data-driven world, the ability to process information and make decisions in real-time has become a competitive advantage across industries. Real-time AI applications represent the cutting edge of this capability, combining stream processing, machine learning, and optimized deployments to deliver immediate insights and actions. Whether you're building fraud detection systems, predictive maintenance tools, or real-time recommendation engines, understanding how to architect and implement these systems is becoming an essential skill for modern developers.

According to recent market projections, the real-time analytics sector is expected to grow from $22.11 billion in 2023 to $60.55 billion by 2028. This growth reflects the increasing importance of instantaneous data processing across sectors like finance, healthcare, retail, and manufacturing.

In this comprehensive guide, we'll walk through everything you need to know to build production-ready real-time AI applications, from choosing the right stream processing framework to implementing continuous learning and optimizing for minimal latency.

Understanding Real-Time AI Applications

Real-time AI applications leverage streaming data to provide immediate insights and actions through specialized processing frameworks. Unlike traditional batch processing systems that analyze data in chunks after collection, real-time systems continuously analyze incoming data streams, making decisions in milliseconds rather than hours or days.
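
To make the contrast with batch processing concrete, here is a minimal sketch of a statistic that is maintained one event at a time instead of being recomputed over a stored batch. The `RunningStats` class and the sample amounts are illustrative assumptions, not part of any framework discussed in this guide.

```python
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Mean and variance updated per event (Welford's algorithm)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def variance(self) -> float:
        # Population variance; 0.0 until at least one event has arrived.
        return self.m2 / self.count if self.count else 0.0


stats = RunningStats()
for amount in [10.0, 20.0, 30.0]:  # stand-in for an unbounded event stream
    stats.update(amount)           # the result is current after every event
```

Each update touches only three numbers, so the cost per event stays constant however long the stream runs.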

As Dr. Jennifer Prendki, a respected data scientist in the field, notes: "Stream processing is the backbone for any real-time AI application, allowing for immediate data feedback loops."

Key Components of Real-Time AI Systems

A comprehensive real-time AI application typically consists of:

- Data Ingestion Layer: Captures and routes streaming data from various sources

- Stream Processing Engine: Processes data flows continuously as they arrive

- Machine Learning Component: Applies AI models to extract insights or make predictions

- Continuous Learning Mechanism: Updates models based on new data

- Low-Latency Serving Infrastructure: Delivers results quickly to end-users or downstream systems

Contrary to popular belief, real-time processing isn't only relevant for large organizations with massive data volumes. Even small-scale applications can benefit significantly from stream processing when it runs on appropriately sized infrastructure.

Stream Processing Frameworks: The Foundation

The backbone of any real-time AI application is its stream processing framework. Let's examine the most popular options and their unique strengths:

Apache Kafka

Kafka has emerged as the de facto standard for building data streaming pipelines, serving as the central nervous system for many real-time applications.

Key features:

- Distributed publish-subscribe messaging system

- High throughput capable of handling millions of messages per second

- Fault tolerance with replication across multiple servers

- Data persistence with configurable retention

- Robust ecosystem with Kafka Connect for integrations and Kafka Streams for processing

Best suited for: Applications requiring high throughput, durability, and integration with multiple systems

Apache Flink

Flink provides a unified framework for batch and stream processing with precise event time processing capabilities.

Key features:

- Stateful computations with exactly-once semantics

- Advanced windowing capabilities (time, count, session)

- Event time processing for handling late and out-of-order data

- Low latency with high throughput

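- Machine learning support through Flink ML, which is maintained as a separate library rather than bundled with core Flink (the legacy FlinkML module was removed from the main distribution)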

Best suited for: Complex event processing scenarios requiring precise time handling and state management
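
Event-time windowing with late data is easier to reason about with a toy model. The sketch below is plain Python, not Flink's API: it assigns events to 60-second tumbling windows by their own timestamps and closes a window once a simple watermark (the highest timestamp seen minus an allowed lateness) passes the window's end. The constants and function names are assumptions chosen for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60     # tumbling window size (illustrative)
ALLOWED_LATENESS = 30   # how far out of order an event may arrive (illustrative)


def window_start(event_time: int) -> int:
    """Return the start of the tumbling window that contains event_time."""
    return event_time - (event_time % WINDOW_SECONDS)


def windowed_counts(events):
    """Count events per event-time window.

    `events` is an iterable of (event_time, payload) pairs in arrival order.
    Returns (closed_windows, dropped), where closed_windows is a list of
    (window_start, count) and dropped counts events that arrived too late.
    """
    open_windows = defaultdict(int)
    closed = []
    dropped = 0
    watermark = 0
    for event_time, _payload in events:
        watermark = max(watermark, event_time - ALLOWED_LATENESS)
        start = window_start(event_time)
        if start + WINDOW_SECONDS <= watermark:
            dropped += 1  # the window for this event has already been emitted
            continue
        open_windows[start] += 1
        # Emit every window whose end is at or before the watermark.
        for ready in sorted(w for w in open_windows if w + WINDOW_SECONDS <= watermark):
            closed.append((ready, open_windows.pop(ready)))
    closed.extend(sorted(open_windows.items()))  # flush at end of input
    return closed, dropped
```

An event stamped 40 that arrives after one stamped 70 still lands in the first window, because the watermark has not yet passed that window's end. An event stamped 20 that arrives after one stamped 130 is counted as dropped.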

Apache Spark Streaming

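Spark's micro-batch architecture offers a balance between ease of use and performance for real-time analytics. The current API is Structured Streaming; the original DStream-based Spark Streaming is now considered legacy.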

Key features:

- Integration with the broader Spark ecosystem (SQL, MLlib)

- Micro-batch processing model

- Unified API for batch and stream processing

- Rich machine learning library integration

- Interactive development with notebooks

Best suited for: Applications that combine batch and stream processing or require advanced analytics capabilities

Other Notable Frameworks

- Amazon Kinesis: Fully managed AWS service for real-time data streaming

- Google Dataflow: Serverless stream and batch processing service

- Apache Pulsar: Distributed pub-sub messaging system with multi-tenancy support

- Apache Storm: Distributed real-time computation system

Choosing the Right Framework

When selecting a stream processing framework, consider these factors:

- Latency requirements: How quickly do you need to process each event?

- Throughput needs: What volume of data will you process?

- Processing semantics: Do you need exactly-once, at-least-once, or at-most-once processing?

- State management: Do your computations require maintaining state?

- Integration requirements: What systems will your pipeline connect to?

- Team expertise: What technologies is your team familiar with?

For most use cases, a combination of Apache Kafka for data ingestion and either Flink or Spark for processing provides a robust foundation. If you're building on cloud infrastructure, managed services like Amazon Kinesis or Google Dataflow can reduce operational overhead.
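
Processing semantics are often the least intuitive item on that list. A common pattern is to accept at-least-once delivery from the transport and make the consumer idempotent, so that a redelivered event has no additional effect. The sketch below assumes each event carries a unique `id` field; the class name and the in-memory set are illustrative, and a real system would keep the seen IDs in durable storage.

```python
class IdempotentProcessor:
    """Apply each event once, even if the transport delivers it again."""

    def __init__(self):
        self.seen_ids = set()  # assumption: replaced by a durable store in production
        self.balance = 0

    def handle(self, event: dict) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event["id"] in self.seen_ids:
            return False
        self.balance += event["amount"]
        self.seen_ids.add(event["id"])
        return True


processor = IdempotentProcessor()
deliveries = [
    {"id": "t1", "amount": 50},
    {"id": "t2", "amount": 20},
    {"id": "t1", "amount": 50},  # redelivery after a retry
]
applied = [processor.handle(event) for event in deliveries]
```

The duplicate delivery of `t1` is ignored, so the final balance reflects each transaction exactly once.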

Continuous Learning in Real-Time AI

One of the key challenges in deploying AI models in production is ensuring they remain accurate as data patterns evolve. In real-time applications, this challenge is amplified by the continuous nature of data streams. Continuous learning addresses this by allowing models to adapt over time based on new data inputs.

As noted by Dr. Sami A. Abou El-Ela from Confluent, "Integrating AI with streaming data allows for enhanced model accuracy and operational efficiency."

Implementation Approaches

There are several approaches to implementing continuous learning in real-time AI systems:

1. Online Learning

Models update incrementally with each new data point or batch of points. This approach is ideal for applications where data patterns change rapidly.

Implementation considerations:

- Choose algorithms that support incremental updates (linear models trained with stochastic gradient descent, incremental decision trees such as Hoeffding trees, some neural networks)

- Implement safeguards against catastrophic forgetting

- Monitor for concept drift and performance degradation
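
As a rough illustration of online learning, the following sketch updates a logistic regression model with one stochastic gradient step per labeled example. It is a teaching example in pure Python under assumed names (`OnlineLogisticRegression`, `learn_one`), not a production implementation.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression trained one example at a time with SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y: int) -> None:
        # For log loss, the gradient with respect to z is (prediction - label).
        error = self.predict_proba(x) - y
        self.weights = [
            w - self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error
```

Each call to `learn_one` costs time proportional to the number of features, which makes this style of update practical inside a streaming consumer.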

2. Periodic Retraining

Models retrain at scheduled intervals using accumulated data. This approach balances adaptation with stability.

Implementation considerations:

- Determine optimal retraining frequency based on domain knowledge

- Implement efficient data storage for historical information

- Create automated pipelines for retraining and deployment
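
One way to picture periodic retraining is a small scheduler that keeps a bounded window of recent labeled examples and reports when a retrain is due. The class name, thresholds, and window size below are hypothetical defaults for illustration; suitable values depend on your domain.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class RetrainingScheduler:
    """Track recent examples and decide when a scheduled retrain is due."""
    interval_seconds: float = 24 * 3600  # hypothetical: retrain at most daily
    min_new_samples: int = 1000          # hypothetical: skip retrains on too little data
    window_size: int = 100_000           # keep only the most recent examples
    last_trained_at: float = 0.0
    new_samples: int = 0
    window: deque = field(default_factory=deque)

    def record(self, example) -> None:
        self.window.append(example)
        if len(self.window) > self.window_size:
            self.window.popleft()  # discard the oldest example
        self.new_samples += 1

    def is_due(self, now: float) -> bool:
        interval_elapsed = now - self.last_trained_at >= self.interval_seconds
        return interval_elapsed and self.new_samples >= self.min_new_samples

    def mark_trained(self, now: float) -> None:
        self.last_trained_at = now
        self.new_samples = 0
```

Requiring both an elapsed interval and a minimum number of new samples avoids retraining on a schedule when almost nothing has changed.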

3. Champion-Challenger Model

New models are tested against current ones before deployment, ensuring improvements before switching. This approach reduces the risk of deploying underperforming models.

Implementation considerations:

- Define clear evaluation metrics for model comparison

- Implement A/B testing infrastructure

- Create automated promotion mechanisms
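
The promotion decision itself can be sketched in a few lines. This example assumes models are plain callables that return a class label and that accuracy on a labeled holdout set is the agreed metric; the `min_gain` margin is an illustrative safeguard against promoting on noise.

```python
def accuracy(model, examples) -> float:
    """Fraction of (features, label) pairs the model classifies correctly."""
    correct = sum(1 for features, label in examples if model(features) == label)
    return correct / len(examples)


def select_model(champion, challenger, holdout, min_gain: float = 0.01):
    """Return (model, score), promoting the challenger only on a clear win."""
    champion_score = accuracy(champion, holdout)
    challenger_score = accuracy(challenger, holdout)
    if challenger_score >= champion_score + min_gain:
        return challenger, challenger_score
    return champion, champion_score


# Toy models: flag a transaction as fraud (1) above an amount threshold.
def champion(features):
    return int(features[0] > 800)


def challenger(features):
    return int(features[0] > 500)


holdout = [([100], 0), ([900], 1), ([600], 1), ([200], 0)]
winner, score = select_model(champion, challenger, holdout)
```

In practice the same comparison is usually run on live shadow traffic as well, so the challenger is judged on current data rather than only on a static holdout set.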

If you're new to ML deployment workflows, our guide on MLOps essentials provides a solid foundation for establishing effective continuous learning pipelines.

Monitoring and Feedback Loops

Continuous learning requires robust monitoring to detect when models need updating:

- Data drift detection: Monitor input distributions for changes

- Performance monitoring: Track accuracy, precision, recall, and other metrics

- Feedback collection: Capture user interactions and corrections

- Anomaly detection: Identify unusual predictions or system behaviors
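
For data drift detection, one widely used statistic is the population stability index (PSI), which compares the binned distribution of a feature in recent traffic against a baseline. The sketch below is a simplified version with equal-width bins derived from the baseline; the function name and bin count are assumptions. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import math


def population_stability_index(expected, actual, bins: int = 10) -> float:
    """PSI between a baseline sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[max(0, min(bins - 1, index))] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins in identical proportions; the value grows as the recent sample moves away from the baseline.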

Low-Latency Deployment Strategies

For real-time AI applications, low latency is often a critical requirement. Organizations that adopt stream processing commonly report moving from minute-scale delays to sub-second responses, even during peak loads.

Infrastructure Optimization

The foundation of low-latency deployments starts with optimized infrastructure:

- Edge computing: Process data closer to the source to reduce network latency

- In-memory computing: Minimize disk I/O operations by keeping data in RAM

- Horizontal scaling: Distribute processing across multiple nodes

- Hardware acceleration: Utilize GPUs, FPGAs, or specialized AI chips for inference

- Network optimization: Minimize hops and maximize bandwidth between components

Software Design Principles

How you architect your application significantly impacts latency:

- Asynchronous processing: Use non-blocking operations to maximize throughput

- Event sourcing: Maintain an immutable log of all events for reliable processing

- Caching strategies: Cache frequent predictions or intermediate results

- Load balancing: Distribute requests evenly across processing units

- Microservices architecture: Decompose applications into independently scalable services
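
Of these principles, caching is the simplest to show in isolation. The sketch below caches predictions for a short time so that repeated requests for the same key skip model inference. The `PredictionCache` name, the injectable clock, and the time-to-live value are assumptions for illustration.

```python
import time


class PredictionCache:
    """Cache computed values for ttl_seconds, then recompute on next access."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock    # injectable so expiry can be tested without sleeping
        self._entries = {}    # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]   # fresh hit: skip the expensive call
        value = compute()
        self._entries[key] = (now + self.ttl_seconds, value)
        return value
```

A short time-to-live keeps the cache from serving predictions that a retrained model would no longer produce.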

For more advanced deployment scenarios, consider exploring cloud-native AI with Kubernetes, which offers powerful tools for scaling and managing distributed AI applications.

Model Optimization Techniques

The ML models themselves can be optimized for faster inference:

- Model quantization: Reduce precision of weights to speed up computation

- Model pruning: Remove unnecessary connections in neural networks

- Model distillation: Train smaller models to mimic larger ones

- Feature selection: Focus on the most predictive features

- Optimized inference engines: Use ONNX Runtime, TensorRT, or similar tools
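
To show what quantization does to the numbers, here is a minimal sketch of symmetric 8-bit quantization applied to a list of weights. Real toolchains quantize per tensor or per channel and also handle activations; the function names and sample weights here are illustrative only.

```python
def quantize(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # 1.0 if all weights are zero
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their integer representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.01, 1.0]
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
```

Each restored weight differs from the original by at most half the scale, which is the precision traded for smaller storage and faster integer arithmetic.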

Practical Implementation: Building a Real-Time AI Pipeline

Let's walk through setting up a basic real-time AI application using Apache Kafka and Python for fraud detection:

Step 1: Set Up Kafka for Stream Processing

First, establish your data ingestion pipeline with Kafka:

Install the required packages:

    pip install confluent-kafka pandas scikit-learn

Then create a Kafka producer for incoming transaction data:

    import json

    from confluent_kafka import Producer

    conf = {'bootstrap.servers': 'localhost:9092'}
    producer = Producer(conf)

    def delivery_report(err, msg):
        """Called once per message to report delivery success or failure."""
        if err is not None:
            print(f'Message delivery failed: {err}')
        else:
            print(f'Message delivered to {msg.topic()} [{msg.partition()}]')

    # Example: send transaction data to Kafka
    def send_transaction(transaction_data):
        producer.produce('transactions',
                         value=json.dumps(transaction_data).encode('utf-8'),
                         callback=delivery_report)
        # Serve delivery callbacks without blocking the caller
        producer.poll(0)

    # Before shutting down, wait for all queued messages to be delivered
    producer.flush()

Step 2: Implement a Streaming Consumer with ML Integration

Next, create a consumer that processes transactions and applies a fraud detection model:

    import json

    import joblib
    from confluent_kafka import Consumer

    # extract_features, alert_security_team, and update_model are
    # application-specific helpers that must be defined elsewhere.

    # Load pre-trained fraud detection model
    model = joblib.load('fraud_model.pkl')

    # Set up Kafka consumer
    conf = {
        'bootstrap.servers': 'localhost:9092',
        'group.id': 'fraud-detection-group',
        'auto.offset.reset': 'earliest'
    }
    consumer = Consumer(conf)
    consumer.subscribe(['transactions'])

    # Process streaming transactions
    try:
        while True:
            msg = consumer.poll(1.0)  # wait up to 1 second for a message
            if msg is None:
                continue
            if msg.error():
                print(f"Consumer error: {msg.error()}")
                continue

            # Parse transaction data
            transaction = json.loads(msg.value().decode('utf-8'))

            # Extract features for prediction
            features = extract_features(transaction)

            # Make prediction (probability of the positive, i.e. fraud, class)
            fraud_probability = model.predict_proba([features])[0][1]

            # Take action based on prediction
            if fraud_probability > 0.8:
                alert_security_team(transaction, fraud_probability)

            # Update model (simplified example of online learning)
            if 'feedback' in transaction:
                update_model(model, features, transaction['feedback'])
    finally:
        # Leave the consumer group cleanly when the loop exits
        consumer.close()
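
The consumer relies on three helpers that the listing does not define. The sketch below shows one possible shape for them. Everything in it is an assumption for illustration: the transaction field names (`amount`, `hour`, `is_foreign`, `transaction_id`), the logging-based alert, and the choice to update only models that expose a scikit-learn style `partial_fit` method.

```python
import logging

logger = logging.getLogger("fraud_detection")


def extract_features(transaction: dict) -> list:
    """Turn a transaction dict into a numeric feature vector (hypothetical fields)."""
    return [
        float(transaction.get("amount", 0.0)),
        float(transaction.get("hour", 0)),
        1.0 if transaction.get("is_foreign") else 0.0,
    ]


def alert_security_team(transaction: dict, fraud_probability: float) -> None:
    """Stand-in alert: log a warning. A real system might page or open a ticket."""
    logger.warning(
        "Possible fraud (p=%.2f) on transaction %s",
        fraud_probability,
        transaction.get("transaction_id", "<unknown>"),
    )


def update_model(model, features: list, label: int) -> bool:
    """Apply one incremental update if the model supports it.

    Returns True when an update was applied, False when the model has no
    partial_fit method and would need offline retraining instead.
    """
    if hasattr(model, "partial_fit"):
        model.partial_fit([features], [label])
        return True
    return False
```

Not every estimator supports incremental updates, so returning `False` gives the caller a signal to queue the example for periodic retraining instead.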

Step 3: Deploy for Low-Latency Performance

To optimize for low latency, consider containerizing your application and deploying it close to your data sources. For more detailed guidance on packaging ML models for production, check out our article on transforming models into microservices.

    # Example Dockerfile for the consumer service
    FROM python:3.9-slim
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt
    COPY fraud_model.pkl .
    COPY consumer.py .
    CMD ["python", "consumer.py"]

Real-World Applications and Case Studies

Financial Fraud Detection

A major financial institution implemented a real-time fraud detection system using Kafka and Flink, reducing fraud detection time from minutes to milliseconds. The system processes thousands of transactions per second, applying machine learning models to identify potentially fraudulent patterns. By implementing continuous learning, the system adapts to new fraud patterns, significantly reducing false positives compared to static rule-based systems.

Manufacturing Predictive Maintenance

A manufacturing company deployed sensors across its factory floor, streaming data to a real-time analytics platform built on Apache Kafka and Spark Streaming. The system predicts equipment failures 24-48 hours before they occur, allowing for scheduled maintenance instead of emergency repairs. This implementation reduced unplanned downtime by 35% and maintenance costs by 25%.

E-commerce Recommendation Engine

An online retailer built a real-time recommendation system that analyzes customer browsing behavior as it happens. Using Kafka for data ingestion and a custom ML pipeline for processing, the system delivers personalized product recommendations within 50 milliseconds of a customer action. This real-time personalization increased conversion rates by 15% and average order value by 7%.

Common Challenges and Solutions

Data Quality and Preprocessing

Challenge: Stream processing requires clean, well-structured data, but real-world data sources often contain inconsistencies, missing values, or errors.

Solution: Implement data validation at the ingestion layer, use schema registries to enforce data contracts, and design robust error handling for malformed data, such as routing bad records to a dead-letter topic. Frameworks like Apache Beam let you express these validation steps as ordinary pipeline transforms.
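
A minimal sketch of validation at the ingestion layer might look like the following. The required fields and the non-negative amount rule are assumptions for a transaction stream; in a real pipeline the schema would normally come from a schema registry, and rejected records would be published to a separate topic rather than kept in a list.

```python
REQUIRED_FIELDS = {
    "transaction_id": str,
    "amount": (int, float),
    "timestamp": (int, float),
}


def validate(record: dict) -> list:
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for {name}")
    if not problems and record["amount"] < 0:
        problems.append("amount must be non-negative")
    return problems


def route(records):
    """Split records into valid ones and dead-letter entries with reasons."""
    valid, dead_letter = [], []
    for record in records:
        problems = validate(record)
        if problems:
            dead_letter.append({"record": record, "problems": problems})
        else:
            valid.append(record)
    return valid, dead_letter
```

Keeping the reasons alongside each rejected record makes it possible to fix the upstream source and replay the data later.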

Scaling and Performance Bottlenecks

Challenge: As data volumes grow, many real-time systems hit performance bottlenecks that increase latency.

Solution: Design for horizontal scalability from the start, implement data partitioning strategies, use back-pressure mechanisms to handle load spikes, and continuously monitor performance metrics to identify bottlenecks early.
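
Back-pressure can be illustrated with a bounded buffer between a fast producer and a slower consumer. In this sketch, which uses only the Python standard library, the producer blocks whenever the buffer is full, so it slows down to the consumer's pace instead of exhausting memory. The function name, buffer size, and doubling step are illustrative stand-ins.

```python
import queue
import threading


def run_pipeline(items, max_pending: int = 5):
    """Process items through a bounded buffer and return the results in order."""
    buffer = queue.Queue(maxsize=max_pending)
    processed = []

    def worker():
        while True:
            item = buffer.get()
            if item is None:            # sentinel: no more work
                break
            processed.append(item * 2)  # stand-in for model inference

    consumer_thread = threading.Thread(target=worker)
    consumer_thread.start()
    for item in items:
        buffer.put(item)  # blocks while the buffer is full (back-pressure)
    buffer.put(None)
    consumer_thread.join()
    return processed
```

Stream processing frameworks apply the same idea across network boundaries, slowing upstream operators when downstream ones fall behind.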

Model Drift and Accuracy Degradation

Challenge: ML models in production tend to degrade over time as data patterns evolve.

Solution: Implement robust monitoring for data and concept drift, establish automated retraining pipelines, use champion-challenger approaches for model updates, and maintain feedback loops for continuous improvement.

Frequently Asked Questions

What are the best frameworks for real-time AI applications?

The most widely adopted frameworks for real-time AI applications include Apache Kafka for data ingestion, Apache Flink or Spark Streaming for processing, and TensorFlow Serving or ONNX Runtime for model serving. The best choice depends on your specific requirements for latency, throughput, and processing semantics. For cloud-native deployments, managed services like Amazon Kinesis, Google Dataflow, or Azure Stream Analytics offer reduced operational overhead.

How do I implement low-latency processing in my AI systems?

Low-latency processing requires optimization at multiple levels: infrastructure (use edge computing, in-memory processing, and hardware acceleration), architecture (implement asynchronous processing, microservices, and effective caching), and model optimization (apply quantization, pruning, and distillation techniques). Start by identifying and addressing your current bottlenecks through comprehensive performance profiling.

What is continuous learning and how does it apply to AI?

Continuous learning is an approach where AI models evolve over time based on new data. In traditional ML workflows, models are trained once and deployed statically, potentially becoming outdated as data patterns change. Continuous learning addresses this by implementing feedback loops that allow models to adapt, either through online learning (incremental updates), periodic retraining, or champion-challenger approaches where new models are tested against current ones before deployment.

Can real-time stream processing be used in small applications?

Absolutely. While stream processing frameworks like Kafka and Flink can scale to handle massive data volumes, they're also suitable for smaller applications. For small-scale use cases, you can run these frameworks on modest hardware or use lightweight alternatives like Redis Streams or even simple message queues combined with worker processes. The principles of stream processing apply regardless of scale, and the benefits of real-time insights can be valuable even for applications with lower data volumes.

How do I set up a Kafka stream for real-time processing?

Setting up a Kafka stream involves: (1) installing and configuring Kafka brokers, (2) creating topics to organize your data streams, (3) implementing producers to send data to Kafka, (4) developing consumers to process the data, and (5) optionally using Kafka Streams or Kafka Connect for more advanced processing and integrations. For development, you can use Docker to quickly spin up a Kafka environment, and for production, consider managed services like Confluent Cloud or Amazon MSK to reduce operational complexity.

What are common challenges in deploying real-time applications?

Common challenges include ensuring data quality in streaming contexts, managing state in distributed systems, handling late or out-of-order data, scaling to meet performance requirements, implementing effective monitoring and alerting, and maintaining system reliability under varying load conditions. Additionally, organizational challenges often arise around skills gaps, operational responsibilities, and integration with existing systems and processes.

What should I consider when choosing a stream processing framework?

Key considerations include: latency requirements (how quickly you need to process events), throughput needs (volume of data), processing semantics (exactly-once vs. at-least-once processing), state management capabilities, fault tolerance, scalability, integration with other systems, operational complexity, and team expertise. Also consider whether you prefer a managed service or self-hosted solution, and whether you need batch processing capabilities alongside streaming.

How do I optimize AI models for real-time performance?

Model optimization techniques include: quantization (reducing numerical precision), pruning (removing unnecessary connections in neural networks), knowledge distillation (training smaller models to mimic larger ones), feature selection (focusing on the most predictive features), model-specific optimizations (like using convolutional operations efficiently), and leveraging specialized inference engines like TensorRT, ONNX Runtime, or TensorFlow Lite. Additionally, consider pre-computing and caching results for common inputs and partitioning your models to enable parallel processing.

Conclusion

Building real-time AI applications represents one of the most exciting and challenging areas in modern software development. By combining stream processing frameworks, continuous learning capabilities, and low-latency deployment strategies, developers can create systems that deliver immediate value from data streams across industries.

The journey to successful real-time AI implementation requires thoughtful architecture decisions, attention to performance optimization, and a commitment to continuous improvement. Start by clearly defining your use case and requirements, then select the appropriate frameworks and tools that align with your team's expertise and operational capabilities.

As the market for real-time analytics continues its explosive growth, the skills to build these systems will become increasingly valuable. Whether you're developing fraud detection systems, predictive maintenance applications, or personalized recommendation engines, the principles outlined in this guide provide a foundation for success in the dynamic world of real-time AI.

What real-time AI applications are you building? Share your experiences and challenges in the comments below!